A Casual Take on the AI Doomsday Clock

Baffling Apocalyptic Timers Keep Coming: The Constant Illusion of Disaster

Meet the AI Safety Clock, a new initiative from a Saudi-backed Swiss business school. Picture Excel spreadsheet salespeople turning their pitch into a warning about a god-like AI, and you've got the gist. The clock was introduced by Michael Wade, a TONOMUS professor at IMD Business School in Lausanne, Switzerland, and Director of the TONOMUS Global Center for Digital and AI Transformation, who laid out his thinking in a recent op-ed for TIME.

The ticking clock was once a powerful symbol of the atomic era; today it is a worn-out metaphor. The Doomsday Clock is an image so old that it recently celebrated its 75th birthday. After World War II, scientists who had helped develop the atom bomb formed the Bulletin of the Atomic Scientists, and the Doomsday Clock became one of their tools for warning the world. Each year, experts gather to assess the state of the world and set the clock accordingly: the closer it is to midnight, the closer humanity is to its demise. At the moment, it is set at a chilling 90 seconds to midnight.

Differentiating itself from the Bulletin's Doomsday Clock, Wade's creation keeps an eye on artificial intelligence dangers. "The Clock's current reading—29 minutes to midnight—is a measure of how close we are to the edge of uncontrolled AGI causing existential risks," Wade explained in his TIME piece. He cautions that while there haven't been any catastrophic incidents yet, the breakneck pace of AI development and regulatory intricacies mean everyone should remain vigilant.

Silicon Valley's fervent AI advocates love a nuclear metaphor. OpenAI CEO Sam Altman has compared his company's work to the Manhattan Project, and Senator Edward J. Markey (D-MA) has likened America's rush to embrace AI to the pursuit of the atomic bomb. There may be a hint of genuine fear in these comparisons, but much of it is simply marketing hype.

We are deep in the AI hype cycle. Companies promise that AI will deliver unparalleled returns and wipe out labor costs, and they claim machines will soon handle everything for us. The reality is more complicated. AI does not eliminate labor and production costs so much as shift them elsewhere. Ignoring that, we fixate on far-off threats like AI usurping humanity while real-world problems, such as energy consumption, the exploitation of human labor in training, and unwanted AI-generated content, are already apparent.

At a Tesla event, robot bartenders poured drinks, giving the impression that they worked autonomously. A closer look suggests they were likely controlled remotely by humans. LLMs, meanwhile, burn through vast amounts of water and electricity to produce answers and rely heavily on human "trainers."

Fears of Skynet wiping out humanity overshadow these urgent issues right in front of us. The Bulletin, by contrast, publishes a wealth of insightful articles every year about the real risks arising from nuclear weapons and new technologies, including pieces that debunk overblown claims about AI and bioweapons and encourage meaningful conversation.

The Swiss AI clock lacks the scientific backing enjoyed by the Bulletin's Doomsday Clock, although it claims to monitor related articles. What it does have is funding from Saudi Arabia through TONOMUS, a subsidiary of NEOM, the futuristic city Saudi Arabia aims to build in the desert. NEOM's other promises include robot dinosaurs, flying cars, and an artificial moon, so its backers may be dreaming a little too big.

So I find it difficult to take the AI Safety Clock seriously.

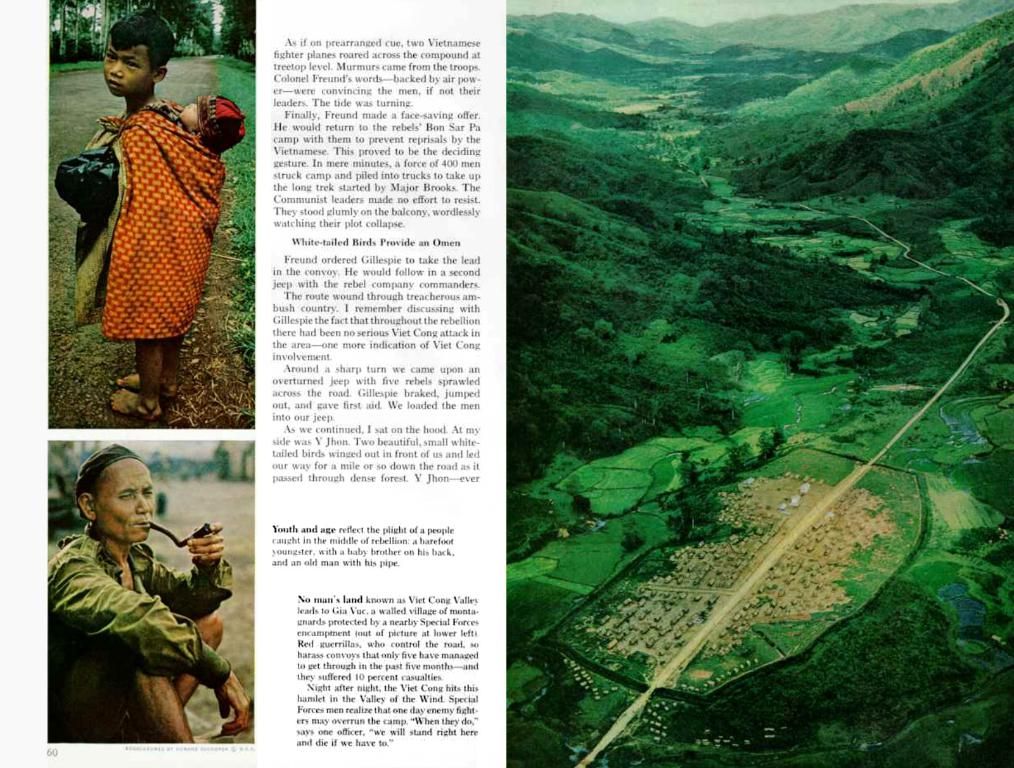

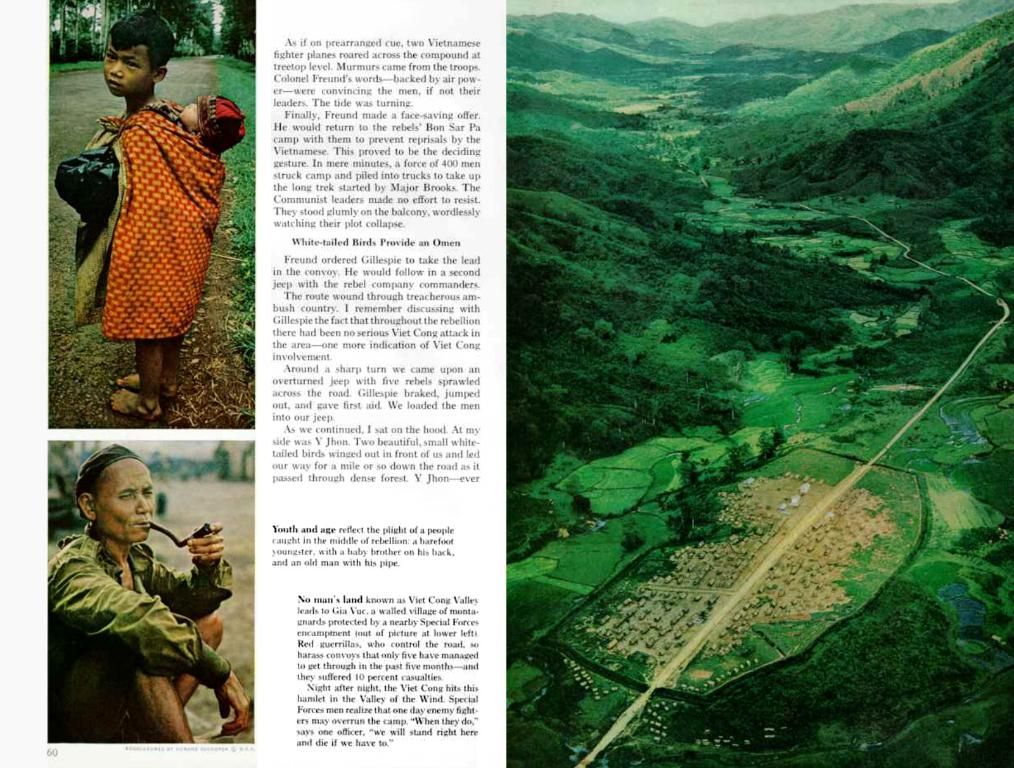

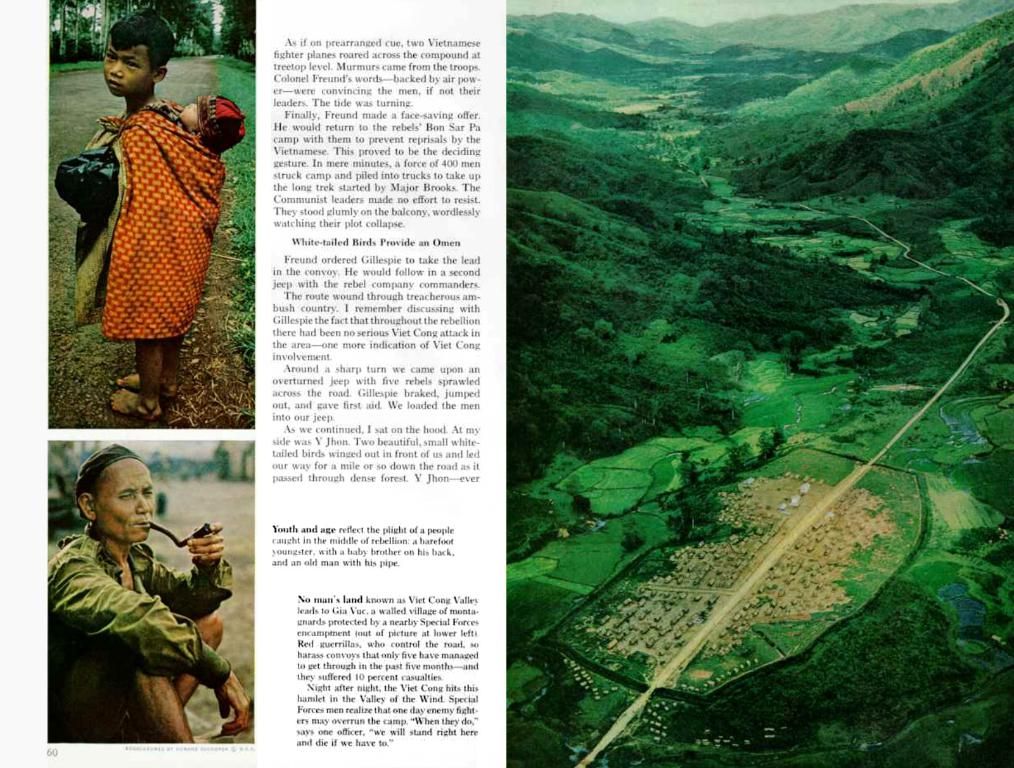

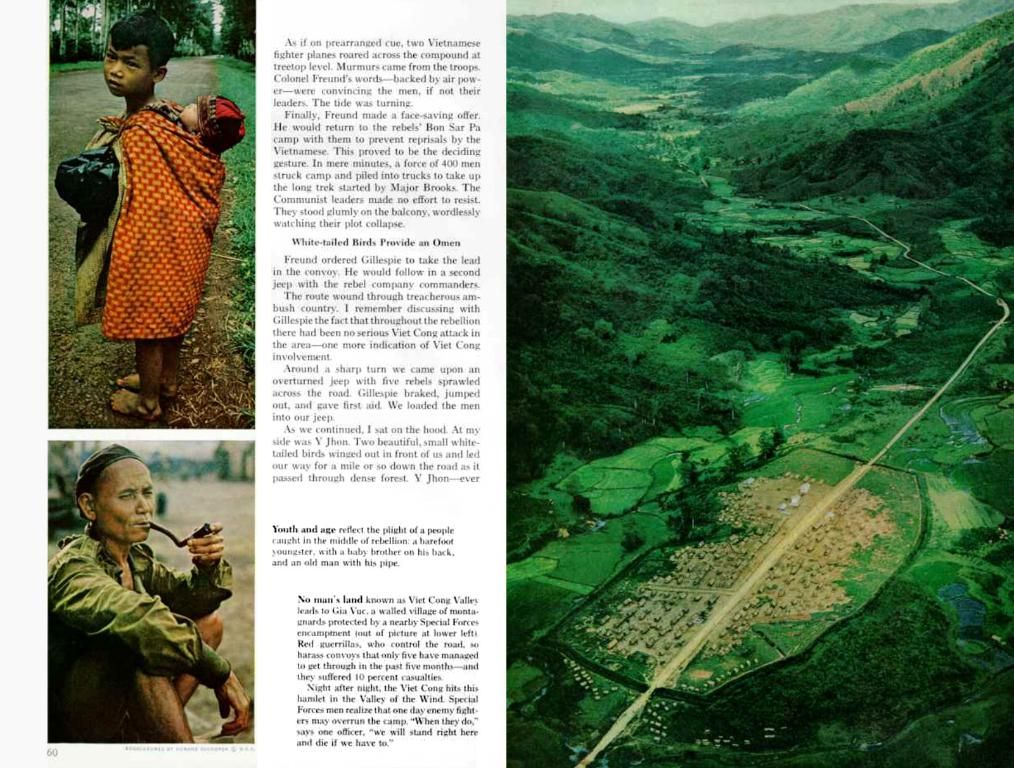

- The AI Safety Clock, a new initiative introduced by Michael Wade, tracks potential dangers posed by artificial intelligence, which sets it apart from the Bulletin's Doomsday Clock and its warnings about nuclear weapons.

- AI proponents often reach for nuclear metaphors: OpenAI CEO Sam Altman has compared his company's work to the Manhattan Project, and Senator Edward J. Markey has likened America's rush to embrace AI to the pursuit of the atomic bomb.

- In contrast to far-off threats such as AI taking over humanity, real-world issues such as energy consumption, the exploitation of human labor in training, and unwanted AI-generated content are already apparent.

- The Swiss AI clock, despite claiming to monitor related articles, lacks the scientific backing enjoyed by the Bulletin's Doomsday Clock and its warnings about biosecurity and disarmament.