MIT engineers help multirobot systems stay within the safety zone

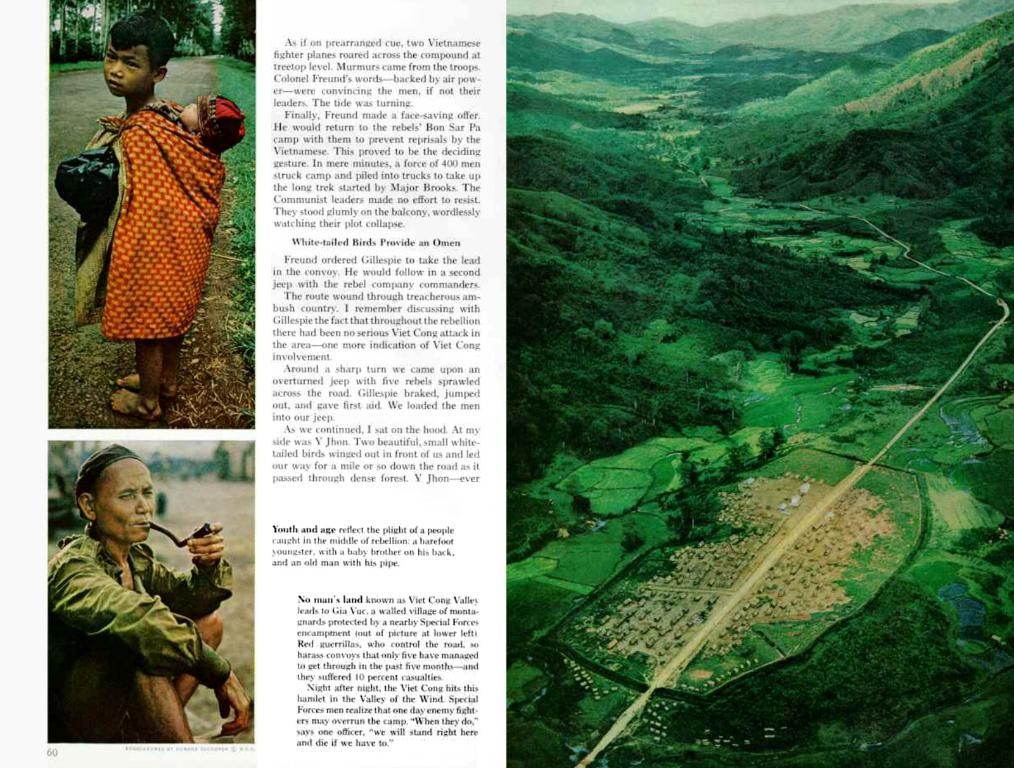

Drone shows, those big-time light displays in the sky, have become the talk of the town thanks to the intricate patterns and shapes formed by hundreds to thousands of buzzing bots. But man, when things go awry, as they did in Florida, New York, and quite a few other places, they can turn into a real disaster for folks on the ground. It's all fun and games until someone's eye meets an errant drone, dig?

These drone mishaps showcase the bare-knuckle challenges of safety in what engineers call "multiagent systems": systems where multiple computer-controlled agents, such as robots, drones, and even self-driving cars, work together like a well-oiled machine.

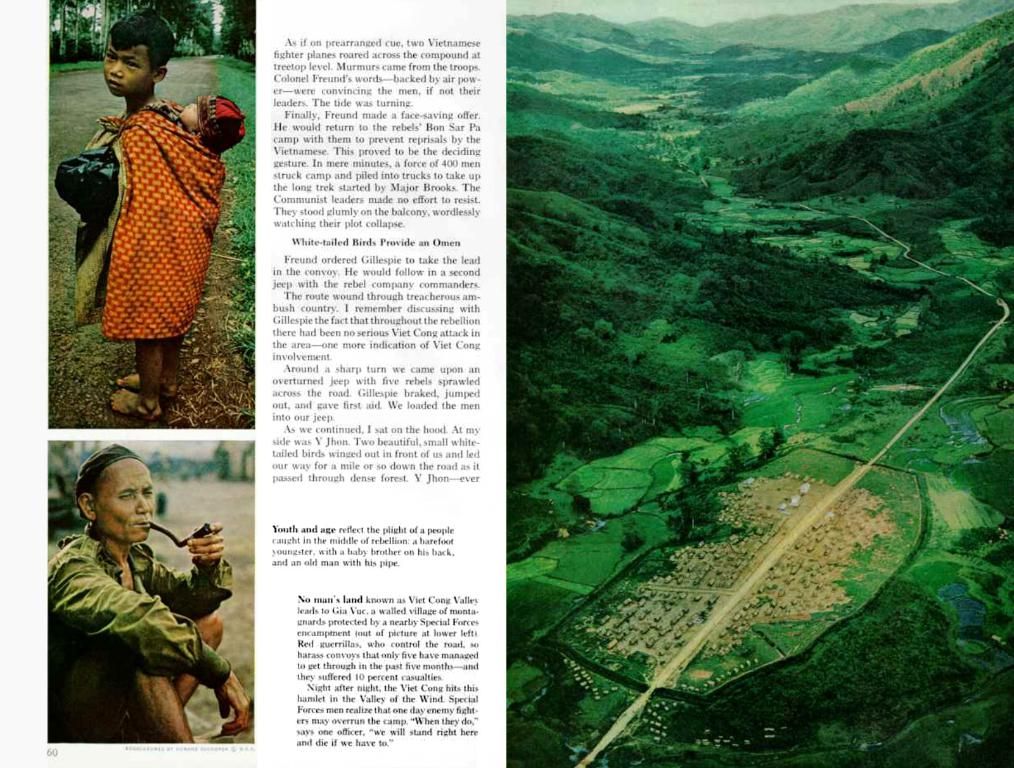

Thanks to a team of tech-savvy boffins from MIT, though, we might just see a safer future for these large-scale operations. They've cooked up a nifty training method that promises to keep these multiagent systems safe in congested regions. The secret sauce? The method learns the ropes from a small cluster of drones, then uses what it learned to teach larger numbers of bots to work safely in a coordinated manner. Neat, huh?

In actual tests, they managed to successfully train a bunch of palm-sized drones to play team games, like switching positions midflight and landing on moving vehicles on the ground without causing any pandemonium. In simulations, their magic formula performed like a charm, demonstrating the ability to scale up to thousands of drones, ensuring that big ol' swarms could perform the same smooth tricks as their tiny predecessors.

Although the lab rats on the MIT team didn't pull back the curtain on the specifics of how exactly they guarantee safety in crowded places, there are some widely used approaches to keeping systems like these safe:

- Use game theory and machine learning to predict and control agent interactions, potentially avoiding dangerous dynamic situations;

- Run exhaustive simulations in controlled environments to identify and mitigate risks;

- Implement coordination mechanisms that encourage cooperation or competition among agents for safer interactions;

- Incorporate feedback loops and adaptive learning to respond to unforeseen scenarios, maintaining stability as you go.
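To make the simulation idea from the list above a bit more concrete, here is a minimal sketch of a safety monitor that replays a toy scenario and flags every timestep where two agents get closer than a chosen safety radius. This is not the MIT team's method. The function names (`min_separation_violations`, `simulate`), the straight-line motion model, and the `safety_radius` parameter are all assumptions made up for illustration.

```python
import math
from itertools import combinations


def min_separation_violations(positions, safety_radius):
    """Return index pairs (i, j) whose distance is strictly below safety_radius."""
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions), 2)
        if math.dist(p, q) < safety_radius
    ]


def simulate(starts, velocities, steps, safety_radius, dt=1.0):
    """Move each agent in a straight line (a hypothetical toy model) and
    return {step: violating pairs} for every step that is unsafe."""
    positions = [tuple(p) for p in starts]
    unsafe = {}
    for step in range(steps + 1):
        # Check the current snapshot before advancing the agents.
        pairs = min_separation_violations(positions, safety_radius)
        if pairs:
            unsafe[step] = pairs
        positions = [
            (x + vx * dt, y + vy * dt)
            for (x, y), (vx, vy) in zip(positions, velocities)
        ]
    return unsafe


if __name__ == "__main__":
    # Two drones flying head-on along the x-axis, swapping positions.
    report = simulate(
        starts=[(0.0, 0.0), (10.0, 0.0)],
        velocities=[(1.0, 0.0), (-1.0, 0.0)],
        steps=10,
        safety_radius=3.0,
    )
    print(report)
```

In a real pipeline, the straight-line model would be swapped for the actual controller or learned policy, and the flagged steps would feed back into training or tuning. The pairwise check is quadratic in the number of agents, so a swarm of thousands would likely need a spatial index instead.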

With MIT's OpenCourseWare and other resources, you can dive deep into the world of multi-agent systems, learning the fundamentals that can be tailored to develop practical solutions for complex scenarios. Other frameworks like MARTI, from Tsinghua University, offer tools for scalable multi-agent systems using reinforcement learning. Cool, right? So, not only can multi-agent systems light up the sky, but they might just save our necks too!

- Research on multi-agent systems such as drones and self-driving cars, including the work at MIT, aims to address safety concerns in crowded environments. General approaches include game theory, machine learning, exhaustive simulations, coordination mechanisms, feedback loops, and adaptive learning.

- Advances in artificial intelligence could make environmentally friendly shows such as drone displays safer and more reliable, thanks to training methods for multi-agent systems that could help prevent accidents and disasters.